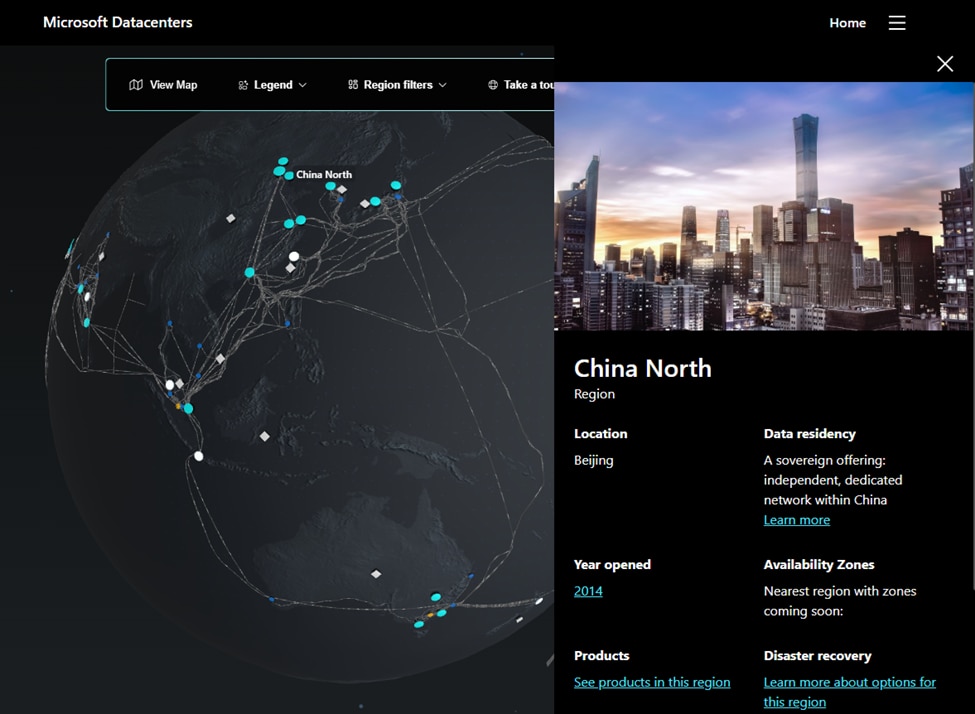

On April 23, 2024, Azure cloud operations in China suffered one of the most notable outages of the year, with the availability issue detected at approximately 09:37 UTC. Users of over a dozen Azure® services, the Azure China portal, and Azure APIs were all affected. Fortunately, Microsoft teams recovered from the issue and did an excellent job communicating through their post-incident review (PIR).

In this article, we’ll use the PIR to analyze the outage, exploring three key lessons that ITOps and website administrators can learn from this incident.

Scope of the Outage

Based on the PIR, the Azure China incident lasted from approximately 08:45 UTC to 11:00 UTC on April 23, 2024. Because cached DNS records do not expire immediately, Microsoft did not detect the availability issue until approximately 09:37 UTC.

Affected Azure services included:

- Data Explorer

- Container Registry

- Machine Learning

- API Management

- AI Speech

- Policy

- Kubernetes Services (AKS)

- Data Factory

- Logic Apps

- Service Bus

- IoT Hub

- Databricks

- Virtual Desktop

- Cosmos DB

- Log Analytics in Azure Monitor (intermittent latency)

- Microsoft Sentinel (intermittent latency)

Users who tried to manage their Azure resources through the Azure China portal (portal.azure.cn) or the management API may also have been impacted.

Root Cause of the Outage

What did it all come down to? A nameserver configuration change.

Azure was required to conduct internal audits to comply with Chinese regulations. As part of the audits, two domains were incorrectly flagged for decommissioning. Although Microsoft has a process for DNS zone decommissioning, the management tool involved did not include Azure China DNS zones. A domino effect ensued, and the nameservers for the two domains were switched to inactive as part of the decommissioning process.

As resolvers tried to refresh their cache, they received the inactive nameserver records, which is when DNS timeouts began. Microsoft reverted to known good nameservers at 10:13 UTC, and traffic returned to normal levels by 11:00 UTC. If you would like deeper insight, watch the Microsoft incident retrospective.
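To illustrate why a nameserver change like this surfaces gradually rather than all at once, here is a minimal Python sketch. The TTL, the change time, and the resolver names are hypothetical values chosen for illustration; none of them come from the PIR. The sketch also assumes each resolver re-queries the moment its cached entry expires, which simplifies real resolver behavior.

```python
def first_failure_minute(cached_at: int, change_at: int, ttl: int) -> int:
    """Return the minute at which a resolver first fetches the bad records.

    Assumes the resolver re-queries the moment its cached entry expires,
    which is a simplification of real resolver behavior.
    """
    if ttl <= 0:
        raise ValueError("ttl must be positive")
    expires = cached_at + ttl
    # Refreshes that happen before the change still return good records.
    while expires < change_at:
        expires += ttl
    return expires


if __name__ == "__main__":
    # Hypothetical resolvers and timings, in minutes from an arbitrary start.
    change_at, ttl = 45, 30
    resolvers = {"resolver-a": 0, "resolver-b": 20, "resolver-c": 40}
    for name, cached_at in resolvers.items():
        minute = first_failure_minute(cached_at, change_at, ttl)
        print(name, "first sees the bad records at minute", minute)
```

In this made-up scenario, the three resolvers start failing at different times even though the change happened at a single moment. That staggering is the general effect that can delay detection of a DNS problem.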

Lessons to Learn from the Outage

Kudos to Microsoft for providing a detailed PIR of the outage. This kind of transparent communication helps build customer trust and enables the rest of us to learn. Let’s look at three key lessons from the outage, then consider how you can improve your system availability and internal processes.

Lesson #1: It’s Always DNS.

The meme is true: It’s always DNS.

OK, we’re probably exaggerating a little bit. Still, the “phone book of the internet” is also one of its biggest failure points. This is simply a reality of working with internet-facing services. Failure at the DNS level can hit everyone, from small website administrators to tech giants like Microsoft.

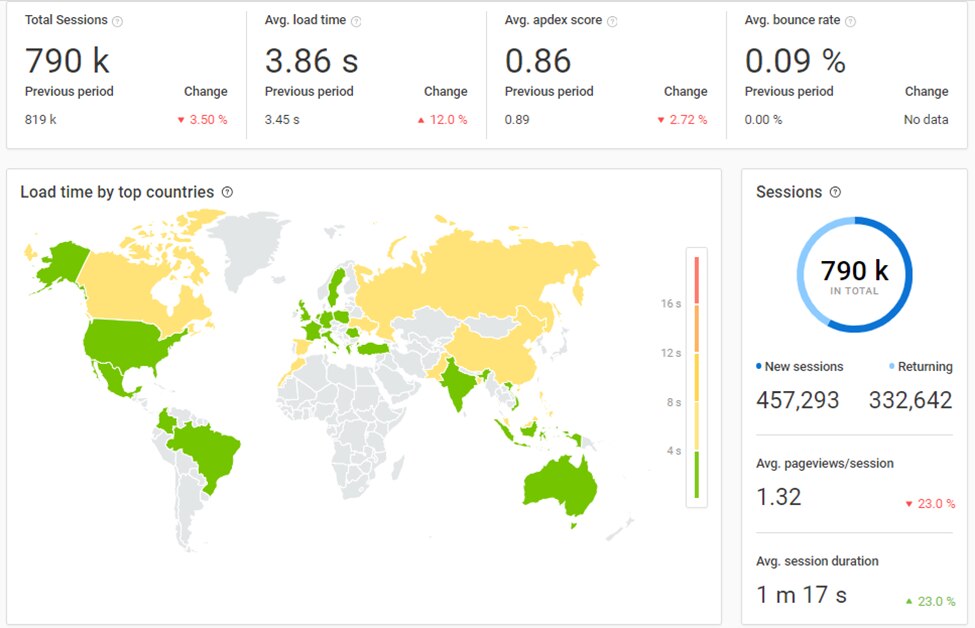

ITOps engineers need to account for this point of failure by establishing well-defined contingency plans (more on that below) and robust monitoring. However, monitoring for DNS issues can be tricky; the results of your checks can vary based on the DNS cache, time to live, and the region you’re monitoring from.

How can administrators improve their DNS monitoring?

- Monitor your sites from multiple regions. Checking connectivity from multiple locations across the globe can help ensure name resolution and connectivity are working for everyone, not just one specific monitoring station.

- Monitor your nameservers. Monitoring your nameservers directly can improve your security posture and help you detect DNS issues early. In addition to standard connectivity monitoring, monitoring for changes to key DNS records—such as NS, MX, SRV, and SOA records—can help you quickly detect unexpected changes.
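The two tips above can be sketched in a few lines of Python. This is a rough illustration, not a production monitor: the function names, the baseline format, and the idea of passing in one lookup function per monitoring region are all assumptions made for this example. The lookup is injected so the comparison logic stays independent of any particular DNS library.

```python
from typing import Callable, Dict, Iterable, Set

# Injected lookup: (domain, record_type) -> record values as strings.
Lookup = Callable[[str, str], Iterable[str]]


def normalize(values: Iterable[str]) -> Set[str]:
    """Lowercase and drop the trailing dot so equivalent names compare equal."""
    return {v.strip().lower().rstrip(".") for v in values}


def find_record_drift(domain: str,
                      expected: Dict[str, Iterable[str]],
                      lookup: Lookup) -> Dict[str, Dict[str, Set[str]]]:
    """Compare live records with a known-good baseline.

    Returns only the record types that differ, each with the values that
    are missing from the live answer and the values that are unexpected.
    """
    drift: Dict[str, Dict[str, Set[str]]] = {}
    for rtype, values in expected.items():
        want = normalize(values)
        try:
            got = normalize(lookup(domain, rtype))
        except Exception:
            # A timeout or resolution error is itself a signal: treat the
            # whole record set as missing rather than crashing the check.
            got = set()
        if got != want:
            drift[rtype] = {"missing": want - got, "unexpected": got - want}
    return drift


def drift_by_region(domain: str,
                    expected: Dict[str, Iterable[str]],
                    lookups: Dict[str, Lookup]) -> Dict[str, dict]:
    """Run the same comparison through one lookup per monitoring region."""
    results = {region: find_record_drift(domain, expected, lookup)
               for region, lookup in lookups.items()}
    # Keep only the regions that observed a difference.
    return {region: d for region, d in results.items() if d}
```

If the third-party dnspython package is installed, one possible lookup is `lambda d, t: {r.to_text() for r in dns.resolver.resolve(d, t)}`. Running a lookup from each monitoring location and alerting when `drift_by_region` returns anything would cover both tips, under the assumptions above.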

Lesson #2: Test First, then Deploy.

Changes are risky. Even if you have done something dozens of times, it only takes one small change to bring down a website. Implement change controls that reduce the risk of each change and help you understand what to expect when you touch production.

The level of testing required for a system depends on the potential risk introduced by the change and how critical the system is. A basic strategy for small teams is to have a staging or test environment where preproduction updates and changes can be tested before production deployments. For more sophisticated environments, you can use a blue-green or canary deployment strategy.
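As one illustration of the canary idea, the sketch below decides whether to promote or roll back a new version based on error rates observed on a small share of traffic. The thresholds, the `Stats` type, and the three outcome names are hypothetical choices for this example, not values taken from any particular deployment tool.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        # Avoid dividing by zero when no traffic has been observed yet.
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Stats,
                    canary: Stats,
                    min_requests: int = 500,
                    max_extra_error_rate: float = 0.01) -> str:
    """Return "wait", "rollback", or "promote" for a canary release.

    min_requests and max_extra_error_rate are illustrative defaults;
    tune them to your own traffic and risk tolerance.
    """
    if canary.requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    if canary.error_rate - baseline.error_rate > max_extra_error_rate:
        return "rollback"  # canary is noticeably worse than the baseline
    return "promote"
```

Comparing the canary against the current version, rather than against a fixed error budget, keeps the decision meaningful even when the baseline itself is having a noisy day.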

Of course, not every change carries the same risk, so striking a balance between stability and speed is important. Classify your changes using categories inspired by the Information Technology Infrastructure Library (ITIL) or a similar framework. If you use ITIL as a reference, you would use these categories:

- Standard changes are documented, repeatable, and can be pre-approved.

- Normal changes aren’t urgent and should go through a change review.

- Emergency changes (for example, changes made in response to a security threat) can be fast-tracked for rapid implementation.
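The three categories above could be encoded as a simple triage rule. The sketch below is one possible interpretation: the `ChangeRequest` fields and the choice to check urgency before pre-approval are assumptions for this example, not part of ITIL itself.

```python
from dataclasses import dataclass
from enum import Enum


class ChangeType(Enum):
    STANDARD = "standard"    # documented, repeatable, pre-approved
    NORMAL = "normal"        # not urgent; goes through a change review
    EMERGENCY = "emergency"  # fast-tracked, e.g. for a security threat


@dataclass
class ChangeRequest:
    summary: str
    is_preapproved: bool = False  # hypothetical flag: a documented runbook exists
    is_urgent: bool = False       # hypothetical flag: cannot wait for review


def classify(change: ChangeRequest) -> ChangeType:
    """Map a change request to one of the three categories."""
    # Assumption: urgency wins, so an urgent change is fast-tracked even
    # if a pre-approved procedure exists for it.
    if change.is_urgent:
        return ChangeType.EMERGENCY
    if change.is_preapproved:
        return ChangeType.STANDARD
    return ChangeType.NORMAL
```

For example, `classify(ChangeRequest("Rotate TLS certificate", is_preapproved=True))` returns `ChangeType.STANDARD`, while a request with neither flag set falls through to `ChangeType.NORMAL` and the change review.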

If ITIL is too much of a process for your team, that’s OK. Frankly, the specific framework and implementation your team adopts are less important than being intentional about defining your risk tolerance and testing your changes to meet business requirements accordingly.

Lesson #3: Have a Rollback Plan.

As we discussed in the Instagram outage post, you should assume outages will happen and prepare for them accordingly. Applying changes to production systems is risky; even if you have reasonable procedures in place (which Microsoft did), things can go wrong.

Make sure you’re prepared for this by having and testing a rollback plan for any updates you push to production. A solid rollback plan can be the difference between an outage measured in minutes and one measured in hours.

Here are three tips to help you get your rollback plans right:

- Define your RTOs and RPOs. When it comes to your backup and disaster recovery plan, there’s a tradeoff between speed and coverage on one side and cost on the other. Be intentional about setting recovery time objectives (RTOs) and recovery point objectives (RPOs), and then use those to inform decisions about how far you need to go as you create and test your plan.

- Have a backup strategy. There are plenty of quality backup strategies you could implement. As a first step, just pick one and execute it. You’ll sleep easier and thank yourself if disaster strikes. And remember, if you haven’t tested your recovery plan, don’t assume your backups are reliable. Regularly test your ability to recover and meet your RTOs and RPOs.

- Strive for updates that have easy rollbacks. Not every rollback depends on a full data backup. Code changes and website upgrades may be able to roll back without impacting your data at all. If you’re responsible for your upgrade/downgrade process, aim to limit the pain of rolling back if something goes wrong.
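One way to make "regularly test your ability to recover" concrete is to record the outcome of each recovery drill and compare it with your objectives. The sketch below assumes you measure two things during a drill: how long the restore took, and how old the newest restorable data was. The type and field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import List


@dataclass
class RecoveryObjectives:
    rto: timedelta  # maximum acceptable time to restore service
    rpo: timedelta  # maximum acceptable window of lost data


@dataclass
class DrillResult:
    restore_duration: timedelta  # measured time to restore during the drill
    data_loss_window: timedelta  # age of the newest restorable data


def evaluate_drill(objectives: RecoveryObjectives,
                   result: DrillResult) -> List[str]:
    """Return a list of missed objectives; an empty list means the drill passed."""
    failures = []
    if result.restore_duration > objectives.rto:
        failures.append(
            f"RTO missed: restore took {result.restore_duration}, "
            f"target {objectives.rto}")
    if result.data_loss_window > objectives.rpo:
        failures.append(
            f"RPO missed: data loss window {result.data_loss_window}, "
            f"target {objectives.rpo}")
    return failures
```

Tracking these results over time shows whether your recovery capability is drifting away from your stated objectives before a real outage forces the question.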

How Pingdom Can Help Improve Your Website Monitoring

Pingdom® is a simple and powerful availability monitoring platform that can help teams monitor their sites from around the globe. With support for a wide variety of checks, including DNS checks, Pingdom empowers ITOps and website administrators to detect and respond to connectivity issues quickly.

If you’d like to see how Pingdom can help you improve your website monitoring, sign up for a free (no credit card required) 30-day trial today!