As you most likely know, we recently experienced two outages. This post is an update on what we are currently doing to prevent this from happening again, and on what we will be doing next.

To be clear, we already had redundancy in software, machines and network, which was meant to take over if anything went wrong with our system. Unfortunately, this didn’t work as expected because the issue was one step further down the chain.

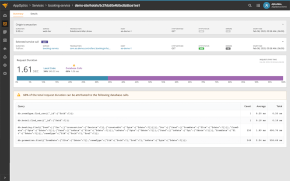

The issue we have been facing is related to our virtualized infrastructure and the storage systems that it uses. This is what our entire team is focusing on, both in our short-term fixes and in the ones to come.

Short term improvements

Last year, we made big investments in a virtualized infrastructure, which is used by most parts of Pingdom. We will change this so that our most important services run on physical servers. All services will also continue to have redundant servers to avoid single points of failure.

These new physical servers have already been ordered and should arrive shortly by priority air freight so we can start using them. As for the virtualized infrastructure, we have already changed it so that the servers it runs on use local storage instead of a storage system they connect to. This change in itself should prevent the same trouble from happening again. The physical servers represent just one extra safety measure; we’d like to think of it as using both belt and suspenders.

Our short-term improvements also include making parts of our deployment automation and flow faster and more flexible. All of the above improvements will be done within two weeks.

Coming improvements

In the longer term (starting in a few weeks and continuing over the coming months), we’re planning many software changes to our core systems. In terms of high availability, the number one improvement is going from one data center to multiple data centers, so that alerting, our control panel, and other important services will not be affected if one of the data centers goes down.

So far we haven’t had any issues directly related to our data center, but this will add an extra layer of protection. Scaling to multiple data centers can be hard and costly, but once a company reaches a certain size it makes sense. We think we are now starting to reach that size, so we will look into this and invest in a secondary data center.

Murphy’s law applies especially to operations

We already knew this about Murphy’s law: the hard part is foreseeing all the possible scenarios before they actually happen. But the single most important thing is to learn from your mistakes and make sure the same thing doesn’t happen again.

In this case, we were already working on it, but it still happened twice in one week and three times in total, despite our optimizations. This is our fault, and for this we are truly sorry. Rest assured that we are doing everything in our power to prevent it from happening again.