If Facebook has its way (and it usually does), over the coming years a ton of websites and online services will become part of the Open Graph that Facebook is promoting, with Facebook firmly planted in the middle. The concept is very interesting, and the potential for this web of data from a wide variety of sources is enormous. You could say that Facebook will tie all our information, and the whole web, together.

There’s just one problem (two, if you count privacy): When the web becomes “interconnected” with Facebook, it also means that when Facebook breaks, the web breaks. In short, Facebook becomes a single point of failure for the web.

Can Facebook deliver on reliability?

Any site that relies on Facebook’s API to function properly will be in trouble if Facebook has any service issues (it’s happened before and it will happen again). This is true today, and the more closely services integrate with Facebook, the more noticeable the problem will be.

Since the sites connecting to Facebook will be using the new Facebook Graph API, the reliability (i.e. uptime) and performance of this API will be critical.
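Uptime and performance can be boiled down to two numbers per monitoring location: the share of checks that succeed and the average response time. Below is a minimal Python sketch of such a check, assuming a plain GET request against whatever endpoint you want to watch. `probe` and `ProbeStats` are hypothetical names, and this is not how any particular monitoring service is implemented.

```python
import http.client
import time
import urllib.request
from dataclasses import dataclass, field


@dataclass
class ProbeStats:
    """Running tally of check results for one monitoring location (hypothetical helper)."""
    latencies_ms: list[float] = field(default_factory=list)  # successful checks only
    failures: int = 0

    def record(self, ok: bool, elapsed_ms: float) -> None:
        # Failed checks count against uptime but are kept out of the average.
        if ok:
            self.latencies_ms.append(elapsed_ms)
        else:
            self.failures += 1

    @property
    def checks(self) -> int:
        return len(self.latencies_ms) + self.failures

    @property
    def uptime_pct(self) -> float:
        return 100.0 * len(self.latencies_ms) / self.checks if self.checks else 0.0

    @property
    def avg_ms(self) -> float:
        return sum(self.latencies_ms) / len(self.latencies_ms) if self.latencies_ms else 0.0


def probe(url: str, timeout_s: float = 10.0) -> tuple[bool, float]:
    """Time one GET request; HTTP errors, network errors, and timeouts count as down."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            resp.read()  # include the full response body in the timing
            ok = 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):  # URLError and timeouts are OSErrors
        ok = False
    return ok, (time.perf_counter() - start) * 1000.0
```

Calling `stats.record(*probe(url))` once per minute from each location and then reading `stats.uptime_pct` and `stats.avg_ms` yields the two kinds of figures discussed below. Averaging only successful checks keeps timeouts from inflating the response-time figure; they show up in the uptime number instead.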

A couple of days ago we set up monitoring of this API (with our uptime monitoring service) to see how it performs over time. We’ll definitely follow up with a deeper performance and reliability analysis once the monitoring has been running for a while, but we already have some interesting results to share:

Geography and performance

On the internet, not all locations are created equal. Since Facebook is hosted in the United States, accessing the Facebook API will be faster from North America than from other parts of the world. Case in point: when we examined our monitoring results, we could clearly see that accessing the API from Europe was significantly slower than from North America, purely because of the added distance.
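Distance costs time because signals in optical fiber travel at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum), and a fresh HTTPS request needs several round trips: a TCP handshake, a TLS handshake, and then the request itself. The Python sketch below turns that into a lower bound on the latency added by extra distance. The 6,000 km figure and the count of four round trips (assuming a full TLS 1.2 handshake) are assumptions for illustration, not a measured route, and `min_added_latency_ms` is a hypothetical helper.

```python
# Signal speed in optical fiber: roughly 200,000 km/s, i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_added_latency_ms(extra_one_way_km: float, round_trips: int) -> float:
    """Lower bound on the latency that extra distance adds to a request.

    Counts propagation delay only; routing detours, queuing, and server
    processing time all come on top of this.
    """
    rtt_ms = 2 * extra_one_way_km / FIBER_KM_PER_MS  # there and back again
    return rtt_ms * round_trips


# Assumed: ~6,000 km of extra path and 4 round trips for a new HTTPS connection
# (1 for the TCP handshake, 2 for a full TLS 1.2 handshake, 1 for the request).
print(min_added_latency_ms(6000, 4))  # prints 240.0
```

Under these idealized assumptions, propagation alone adds a couple of hundred milliseconds, so a gap of that order between continents is plausible even before any other overhead is counted.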

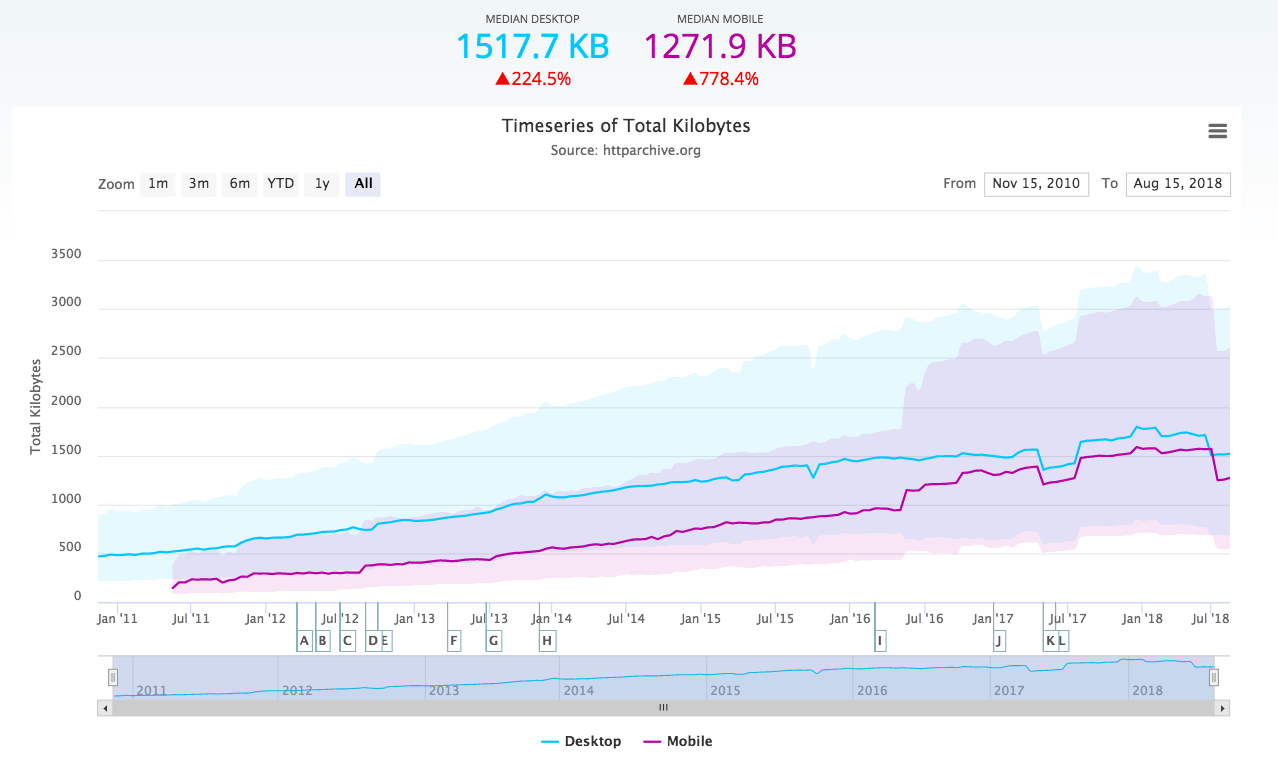

Here is the average time to complete a Facebook Graph API request. The chart is based on more than 3,000 requests from multiple locations in Europe and North America, spread over the last couple of days:

Above: Based on monitoring from multiple locations in North America and Europe between April 27-29, tests performed once per minute.

An average of 340 ms in North America versus 569 ms in Europe is a pretty big difference, although, as we mentioned, this should be expected since Facebook is hosted in the United States. Nevertheless, Facebook API requests are on average 67% slower in Europe than in North America, and this is something that we’ll simply have to live with (at least for now).
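The 67% figure is the gap between the two averages measured against the North American baseline: (569 - 340) / 340 ≈ 0.67. A tiny Python helper makes the calculation explicit; `percent_slower` is a hypothetical name. The choice of baseline matters: measured against the European average instead, the same 229 ms gap is only about 40%.

```python
def percent_slower(slow_ms: float, fast_ms: float) -> float:
    """How much longer `slow_ms` takes than `fast_ms`, as a percentage of `fast_ms`."""
    return (slow_ms - fast_ms) / fast_ms * 100.0


# Average response times from the monitoring above: Europe vs. North America.
print(f"{percent_slower(569, 340):.1f}% slower")  # prints "67.4% slower"
```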

Uptime, on the other hand, was impeccable (100%), although a few days of monitoring is too short a period to draw any meaningful conclusions about reliability. Regardless of the results, we feel it’s safe to say that unless a website is intrinsically tied to Facebook and has to depend on it, it’s probably a good idea to make sure that the site still works even if Facebook is down or has performance issues.
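One common way to keep a page working when a third-party API is down or slow is to give every call a strict time budget and fall back to a default when the budget runs out or the call fails. Below is a minimal Python sketch, assuming the Facebook call is wrapped in a zero-argument function you supply; `call_with_fallback` is a hypothetical helper, not part of any Facebook SDK.

```python
import concurrent.futures
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool: a slow third-party call ties up a worker, not the caller.
_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def call_with_fallback(fetch: Callable[[], T], fallback: T, timeout_s: float = 1.0) -> T:
    """Run fetch() with a time budget and return `fallback` if it fails or is too slow."""
    future = _pool.submit(fetch)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # Too slow: stop waiting. The worker keeps running until fetch() returns,
        # so fetch() should still set its own network timeout.
        return fallback
    except Exception:
        # fetch() raised (API down, bad response, ...): degrade instead of failing.
        return fallback
```

For example, `call_with_fallback(load_friend_list, fallback=[])`, with `load_friend_list` standing in for your own Graph API call, would render an empty widget instead of a hung page. Running the call on a worker thread is what enforces the budget; Python threads cannot be killed, though, so the underlying HTTP request should still carry its own timeout.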

Dare we trust Facebook?

The Open Graph is a brilliant concept, and the potential for what can be done with this graph of knowledge and social connections (because that’s what it comes down to) is huge. And that potential goes way beyond customized web pages.

Some things benefit greatly from having a central repository, a central connection mechanism, and this is certainly one of those. Whether Facebook should be that entity is another matter, but regardless of who is in control, having a single point of failure can cause a great many problems once something goes wrong.

This single point of failure may be as strong an argument for decentralizing the Open Graph (and the APIs used to access it) as any privacy concern. If it reaches the popularity many expect, it will be such a critical element of the web experience that it probably shouldn’t be controlled by any one company or service. On the other hand, that’s kind of what Google did with Search.